Last week we focused on AI at work. Our research led us to estimate that only around 20% of information workers are using AI regularly (more than 3x a week). That percentage seems both low and high depending on your perspective. Low considering just how much the technology has been hyped. High in that generative AI has only been around for about 3 years.

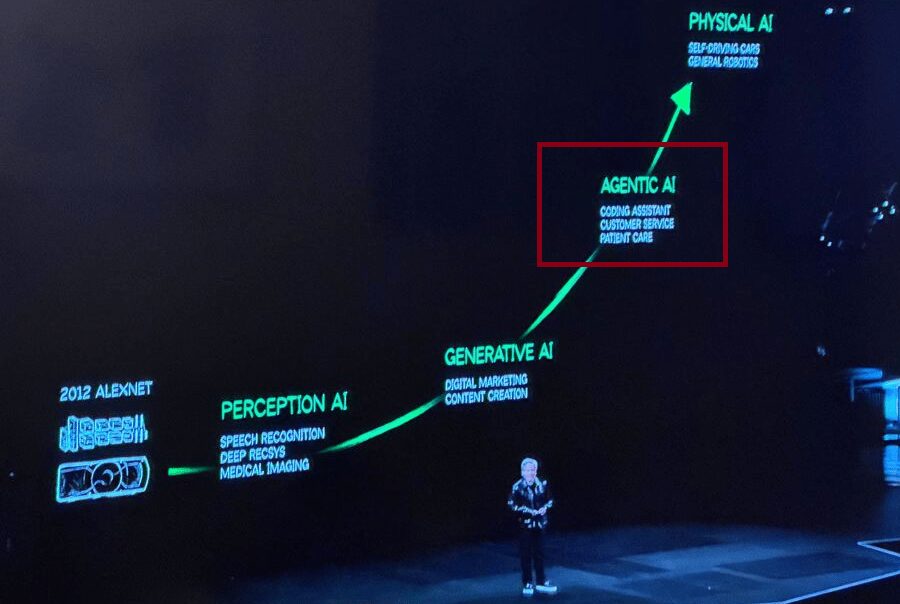

But before most companies even reach generative AI adoption, we’ll probably be deep into a new phase: that of ‘Agentic AI.’

Speaking at CES in January, Nvidia CEO Jensen Huang showcased the phases of AI development.

The most common way people use AI at work is via a chatbot like ChatGPT. But while chatbots can be transformational, they share a common limitation - these platforms are all reactive by nature, responding to human input rather than acting on their own. The next step for AI looks likely to entail a transition from prompt-defined reactivity to relatively autonomous proactivity.

The notion of AI autonomy might strike a note of dystopian alarm but, for the time being at least, it arrives in the guise of relatively benign workflow automation platforms. A new wave of AI development is shifting the focus from assistants to agents - AI systems that don’t just respond to instructions but proactively plan and execute tasks independently. Instead of asking an AI to write an email, an AI agent could identify important messages, draft responses, schedule meetings, and follow up automatically - all without direct input.

This shift is likely to be the biggest since large language models first arrived. Businesses are already experimenting with autonomous agents that can write code, analyze data, and even operate as virtual employees. It’s clear that a new era, in which AI is expected to do more than just answer questions, is already upon us. But how close are we to truly autonomous AI? What can these agents do today, and what challenges still stand in the way?

What are AI agents?

For all its pervasiveness as a buzzword over the last year or so, ‘agent’ often remains a hazily understood concept.

An AI agent is perhaps best understood in relation to an AI assistant, which can be broadly described as a reactive system that responds to user input, providing answers, suggestions, or automation only when prompted. An AI agent, on the other hand, is a proactive system that can plan, execute, and iterate on tasks independently, often making decisions, learning from feedback, and adapting without constant human supervision.

One of the most interesting examples right now is Devin AI by Cognition, which is being trumpeted as the world’s first AI software engineer, capable of writing, debugging, and deploying code with minimal human input. Unlike AI coding assistants such as GitHub Copilot, which suggest snippets, Devin can autonomously plan development tasks, test its own work, and even fix errors - bringing us closer to fully automated software development.

Boardy is a conversational agent that connects you to like-minded business people. We recommend sending it your phone number on LinkedIn - it’ll call you and have a 6-7 minute chat about your business, then make recommendations.

In the world of workflow automation, platforms like AutoGPT allow users to create self-directed AI agents that break down complex goals into sub-tasks and execute them step-by-step. Businesses are using such tools for everything from automated market research to customer outreach, where AI can gather insights, draft personalized emails, and schedule follow-ups without human intervention.
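To make the idea of breaking a goal into sub-tasks concrete, here is a minimal sketch of that loop in Python. It is not AutoGPT's actual code or API. The names `run_agent`, `toy_plan`, and `toy_execute` are hypothetical, and the two toy functions stand in for what would be calls to a language model in a real system.

```python
from collections import deque
from typing import Callable


def run_agent(
    goal: str,
    plan: Callable[[str], list[str]],
    execute: Callable[[str], str],
    max_steps: int = 10,
) -> list[tuple[str, str]]:
    """Break a goal into sub-tasks, then work through them one at a time."""
    queue = deque(plan(goal))  # sub-tasks waiting to be done
    results: list[tuple[str, str]] = []
    # max_steps is a simple safety cap so the loop cannot run forever.
    while queue and len(results) < max_steps:
        task = queue.popleft()
        results.append((task, execute(task)))
    return results


# Hypothetical stand-ins for model calls (assumptions for illustration only).
def toy_plan(goal: str) -> list[str]:
    return [f"research: {goal}", f"draft: {goal}", f"schedule follow-up: {goal}"]


def toy_execute(task: str) -> str:
    return f"done ({task})"


steps = run_agent("customer outreach", toy_plan, toy_execute)
for task, outcome in steps:
    print(task, "->", outcome)
```

The step cap is the one design choice worth noting: even in a toy version, an agent that generates its own work needs a limit on how long it can keep going unattended.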

Meanwhile, AI-driven multi-agent frameworks like CrewAI enable multiple AI models to collaborate, mimicking human teams by delegating tasks between specialized agents. E-commerce and customer service are also seeing the rise of AI agents, with companies deploying autonomous chatbots that go beyond scripted responses - handling real-time support, processing refunds, and even negotiating contracts. In finance, some hedge funds are experimenting with AI trading agents that analyze market conditions, execute trades, and adjust strategies on their own, responding to trends faster than human analysts ever could.
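Delegation between specialized agents can be sketched in a few lines. The example below is a toy illustration of the pattern only and does not use CrewAI's API. `Coordinator`, `researcher`, and `writer` are hypothetical names, and each "agent" is just a plain function standing in for a model.

```python
from typing import Callable

# In this sketch an "agent" is any function that takes a task and returns a result.
Agent = Callable[[str], str]


def researcher(task: str) -> str:
    return f"[researcher] notes on {task}"


def writer(task: str) -> str:
    return f"[writer] draft of {task}"


class Coordinator:
    """Routes each task to the specialist registered for that role."""

    def __init__(self, specialists: dict[str, Agent]) -> None:
        self.specialists = specialists

    def delegate(self, role: str, task: str) -> str:
        if role not in self.specialists:
            raise ValueError(f"no agent for role: {role}")
        return self.specialists[role](task)


crew = Coordinator({"research": researcher, "write": writer})
notes = crew.delegate("research", "market trends")
draft = crew.delegate("write", notes)  # the writer builds on the researcher's output
print(draft)
```

The output of one agent becomes the input of the next, which is roughly how a human team hands work along.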

Opportunities and obstacles

These real-world applications are made possible by advancements in memory systems, reinforcement learning, and planning algorithms, allowing AI to persist across multiple tasks, learn from outcomes, and refine its approach over time. While still in its early stages, this shift from assistants to agents represents a major leap in automation - one that has the potential to reshape entire industries.

Nonetheless, while such advances are being rolled out at the accelerated speed we’ve come to expect in recent years, it would be premature to suggest that there aren’t significant obstacles to be reckoned with. Most obviously, agentic systems often fall short of true automation because, for the time being at least, human supervision is typically still required.

It’s often observed that agents can struggle with long-term planning and error correction. Unlike humans, they lack true reasoning abilities and can misinterpret tasks or fail to adapt when faced with unexpected situations. A single hallucination or incorrect assumption can derail an entire workflow - imagine an AI agent tasked with researching a market trend that misinterprets data and builds an entire report on false premises.

Memory is also cited as a limiting factor - most agents can’t track context effectively over extended interactions, leading to inconsistencies or redundant actions. Security is another concern, as autonomous agents, if not carefully controlled, could be exploited for misinformation, fraud, or unintended system manipulation.

While ongoing development is addressing these weaknesses, today’s AI agents are still best used as co-pilots rather than fully independent workers - they’re capable of handling tasks (relatively) proactively, but not yet ready to replace human oversight.
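One common way to keep a human in the loop is an approval gate: the agent proposes actions, and anything flagged as risky waits for a person to sign off. The sketch below shows one way that could look. `ProposedAction`, `run_with_oversight`, and the `risky` flag are hypothetical names for illustration, and how a real system decides what counts as risky is left as an assumption.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class ProposedAction:
    description: str
    risky: bool  # assumption: something upstream has already labeled the action


def run_with_oversight(
    actions: list[ProposedAction],
    approve: Callable[[ProposedAction], bool],
) -> tuple[list[str], list[str]]:
    """Run low-risk actions directly; hold risky ones unless a human approves."""
    executed: list[str] = []
    held: list[str] = []
    for action in actions:
        if action.risky and not approve(action):
            held.append(action.description)
        else:
            executed.append(action.description)
    return executed, held


actions = [
    ProposedAction("draft reply email", risky=False),
    ProposedAction("issue refund", risky=True),
]

# Here the reviewer rejects everything risky; in practice this would prompt a person.
executed, held = run_with_oversight(actions, approve=lambda action: False)
print("executed:", executed)
print("held for review:", held)
```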

Despite such limitations, AI agents are improving rapidly. Researchers are working on longer memory retention, better reasoning models, and multi-agent collaboration, all of which promise to make them more reliable and adaptable.

Perhaps the future of AI agents isn’t just about automation - it’s about delegation, where systems handle complex workflows while humans provide strategic oversight. As these agents take on more responsibility, questions of trust, alignment, and control will become even more critical. The shift from assistants to autonomous agents is already underway, but for now, the best AI agents aren’t those that replace humans - they’re the ones that work alongside us.