I’ve long been a bit flummoxed by the seeming obsession with ChatGPT. I guess it’s because it was the first AI chatbot to go mainstream, and so gained a stunning first-mover advantage before Claude and Gemini (first Bard) came along.

As my own AI usage has grown, I’ve actually stopped subscribing to ChatGPT for my own company in favour of Gemini, because it’s included in the price of my Google Workspace and seems more than capable. I do still use ChatGPT daily, as it’s provided by a client… so I can compare them pretty well.

GPT 5 is a step forward for complex reasoning tasks, but even so it has left me a little underwhelmed, taking 10 minutes on tasks like spreadsheet comparison only to get them wrong. I can be quite rude to ChatGPT at times.

Fiction and non-fiction writing

One recent project was unusual but intriguing. We wanted to test how to build an AI workflow for creating short stories and longform non-fiction YouTube scripts. This is a challenging set of writing tasks, with all sorts of tonal guidelines and instructions to feed in.

The question was whether we could use a database of variables to write short stories via an automation. Having written a novel myself, I’ve long wondered: is AI really capable of good creative writing at length?

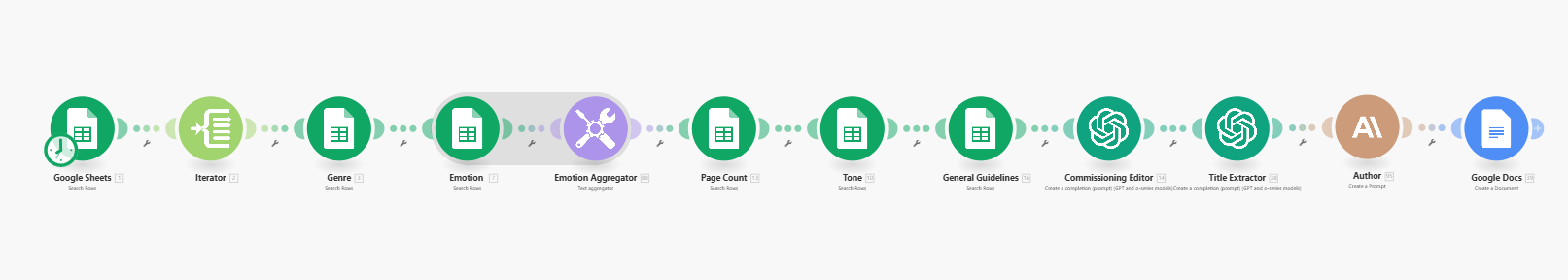

The first iteration was, in hindsight, a bloated mess of routes. Each module shown is an OpenAI API call in Make.

The green OpenAI icons represent a writing task, and my theory was that, for longer stories, we’d need the AI to write in five acts in a kind of ‘agent relay’. I’ll be honest, it couldn’t really do it. Story continuity basically fell to bits.

To refine this, I cut it down to two agent steps: a story summariser and a writer. But to my frustration, OpenAI’s models could not get beyond around 1,200 words in one completion. It wasn’t nearly enough, and while passing the baton on in relay got us over the finish line, some stories just cut off or still had continuity issues.
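For anyone curious what that summariser-plus-writer relay looks like as logic, here is a minimal Python sketch. It is not the actual Make scenario: `relay_story`, `writer` and `summariser` are hypothetical names, and the two model calls are stubbed as plain functions rather than real OpenAI API calls, so it only illustrates how the running summary is handed forward from act to act.

```python
from typing import Callable, List

# A model call is represented abstractly: prompt text in, completion text out.
# In the real workflow each call was a Make module hitting an API; here it is
# just a plain function so the relay logic can run without any network access.
ModelFn = Callable[[str], str]


def relay_story(premise: str, acts: int, writer: ModelFn, summariser: ModelFn) -> str:
    """Write a story act by act, handing a running summary (the 'baton') forward.

    `writer` and `summariser` are hypothetical stand-ins for two model calls.
    """
    summary = ""
    parts: List[str] = []
    for act in range(1, acts + 1):
        prompt = (
            f"Premise: {premise}\n"
            f"Story so far (summary): {summary or 'None yet.'}\n"
            f"Write act {act} of {acts}, continuing seamlessly from the summary."
        )
        parts.append(writer(prompt))
        # Re-summarise everything written so far. Only this summary, not the
        # full text, reaches the next writer call, which is one plausible
        # place for continuity to slip if the summary drops details.
        summary = summariser("\n\n".join(parts))
    return "\n\n".join(parts)


if __name__ == "__main__":
    # Stub models so the sketch runs offline: the writer echoes the act
    # instruction, and the summariser just reports a word count.
    def fake_writer(prompt: str) -> str:
        return "[" + prompt.splitlines()[-1] + "]"

    def fake_summariser(text: str) -> str:
        return f"{len(text.split())} words written"

    story = relay_story("A lighthouse keeper finds a message", 5, fake_writer, fake_summariser)
    print(story)
```

Each act only ever sees a compressed summary of what came before, which is the trade-off of the relay approach: it works around a per-completion length ceiling, but every hand-off is a chance to lose detail.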

GPT 4o kept failing, but I held out for GPT 5. When it arrived, I expected it to solve the previous problems easily. But it couldn’t. Its completions had exactly the same problems.

Enter Claude Opus 4.1

Someone suggested Claude. I’d certainly seen that Anthropic’s models had positive reviews for writing and coding, but I’d never really thought I wanted to add another chatbot to my two-chatbot stack. I’d have to figure out its API as well, which looked tedious.

So I felt we needed to test Claude despite my lack of experience with it. The most widely used Claude model is Sonnet, but Anthropic also offers a more powerful one, Opus 4.1. I thought I’d try it out.

Confusingly, when using Opus 4.1 in an agent workflow, the outputs remained at around 1,200 words.

I tried the model in Claude’s chat interface, and it completed a 3,500-word story in around a minute. This was great news. I switched the workflow step from an agent to a standard Claude chat request, and voilà, we had an output that matched my intentions.

Simplifying the crazy workflow above yielded positive results.

What accounts for an agent being unable to fulfil the task while a chat can? ChatGPT’s explanation was that agents go through much more processing and knowledge lookups, so they may disregard parts of their instructions. Simpler chat requests, for whatever reason, match word counts much better.

Was Claude a better writer?

Over a few weeks, I ran 20+ comparative tests between GPT 5 and Claude Opus with third-party judges, in both fiction and non-fiction scenarios. Opus won in all cases.

GPT 5 is an overcomplicator. In direct chat it's great, and I still use it more than any other chatbot. But in a writing workflow I've found it behaves like an intern. It went off on tangents, like writing in first person present tense (what?). It was also hollow: a soulless AI trying to be creative.

In non-fiction, it made the text so strangely academic that it was basically useless as a YouTube script. I had to come up with a list of banned words, but it still would not follow orders.

Claude Opus, meanwhile, was on point. It just read like it wasn't trying too hard.

My test for a good output is simple: would I change much?

I've been involved in editorial production for 20 years. I review and create daily.

I read a 3,500-word non-fiction output from Claude Opus and thought: all passable. There were only a handful of things I'd change.

But GPT 5 was pretty much unusable.

We have a winner.