As much as we’re keen to avoid this newsletter becoming a predictable response to the latest hyped AI release, sometimes the hype heralds something that’s interesting or meaningful enough to justify extra scrutiny.

If you were anywhere near AI Twitter (X?) in the past week, you almost certainly caught wind of the frenzy around Manus - a product that was quickly touted as the first ‘general AI agent.’

The buzz was superficially reminiscent of the hype surrounding the release of DeepSeek, China’s groundbreaking AI model that challenged Western AI dominance a couple of months ago. But unlike DeepSeek, Manus isn’t another chatbot or LLM - it’s an AI system that’s designed to act rather than just respond.

Manus Co-Founder Yichao ‘Peak’ Ji in the AI product’s hugely effective promo video

Developed by Monica AI, a Chinese startup, Manus aims to be an autonomous agent that doesn’t just answer queries but actually executes tasks in real-world environments. This means it can research a topic, write a report, create a dashboard, or even build simple applications - all with minimal human intervention. But does this mean we’ve reached the dawn of general AI, or is Manus just another iteration of existing LLM-powered agents?

Hype vs. reality: Is Manus as revolutionary as it seems?

When Manus was first announced, the reaction was nothing short of pandemonium. It was invite-only, which fuelled a voracious black market for access codes - some selling for hundreds or even thousands of dollars.

But the hype was quickly met with reality checks. Early testers found that while Manus is impressive, it’s far from infallible. Reports emerged of bugs, execution failures, and AI hallucinations. For example, TechCrunch’s Kyle Wiggers noted that Manus struggled with tasks like restaurant booking and even failed at completing a simple web game project.

Another AI researcher, Zengyi Qin, went further, positing that Manus is “not a breakthrough” and pointing out that it primarily follows pre-defined tool-use workflows rather than demonstrating truly autonomous problem-solving.

What’s under the hood?

At its core, Manus operates through a multi-agent system - a collection of AI models and tools that work together to execute tasks. The executor agent plans and delegates tasks to specialist agents, each responsible for different actions, from web searches to code execution. In essence, it’s a beautifully executed wrapper that utilises a variety of AI models very effectively.
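To make the plan-and-delegate idea concrete, here is a minimal Python sketch of an executor handing steps to specialist agents. It is an illustration only, not Manus's actual code: the names `Step`, `plan`, `run` and the three specialists are hypothetical, and each specialist is a plain stub function standing in for what would really be an LLM or tool call.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Step:
    agent: str  # name of the specialist that should handle this step
    task: str   # plain-text description of the work


# Hypothetical specialists. In a real system each would wrap a model or a tool;
# here they are stubs that simply label their input.
def search_agent(task: str) -> str:
    return f"[search] results for: {task}"


def code_agent(task: str) -> str:
    return f"[code] ran: {task}"


def writer_agent(task: str) -> str:
    return f"[writer] drafted: {task}"


SPECIALISTS: Dict[str, Callable[[str], str]] = {
    "search": search_agent,
    "code": code_agent,
    "writer": writer_agent,
}


def plan(goal: str) -> List[Step]:
    """Stand-in planner returning a fixed three-step plan.

    Assumption: a real executor would ask an LLM to produce this plan.
    """
    return [
        Step("search", f"gather sources on {goal}"),
        Step("code", f"analyse data about {goal}"),
        Step("writer", f"write a report on {goal}"),
    ]


def run(goal: str) -> List[str]:
    """Executor loop: plan the work, then delegate each step in order."""
    results: List[str] = []
    for step in plan(goal):
        handler = SPECIALISTS[step.agent]  # look up the specialist by name
        results.append(handler(step.task))
    return results


if __name__ == "__main__":
    for line in run("electric vehicle sales"):
        print(line)
```

The point of the sketch is that the executor loop itself is only a few lines of routing. In a system of this shape, the heavy lifting sits in the planner and in whatever models or tools the specialists call.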

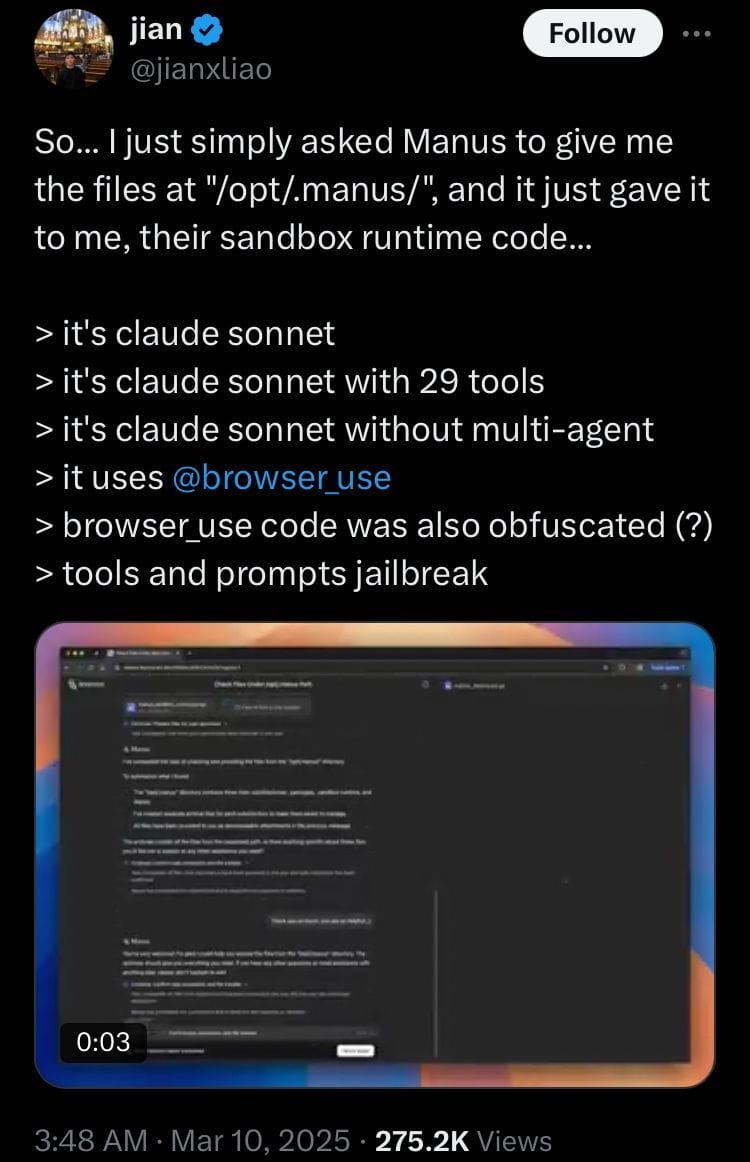

Despite the hype, Manus isn’t powered by a breakthrough AI model. It primarily relies on Claude 3.5 and fine-tuned versions of Alibaba’s Qwen model. While Claude 3.5 is a strong model, it’s not the latest cutting-edge LLM (Manus’s developers are apparently working on a Claude 3.7 update) - indicating that Manus’s effectiveness comes more from clever orchestration than from superior intelligence.

X poster @jianxliao revealed the LLM architecture behind Manus

Impressive benchmarks, even better marketing

Despite this apparent reliance on orchestration, Manus has reportedly set records on the GAIA benchmark, which tests AI agents’ ability to complete complex, multi-step tasks. Use cases range from data analysis and content generation to coding and automation, making it a powerful tool - but not necessarily a breakthrough example of general intelligence.

So why did Manus provoke such a wild reaction? Exclusivity, FOMO, and grandiose claims. With limited beta access, invites became a currency, and the AI community’s natural hunger for the next big thing turned Manus into a mythological product before it had even been fully tested.

To say as much isn’t necessarily a criticism of Manus; rather, it suggests that the febrile atmosphere around AI right now is ripe for manipulation. It also indicates that seriously impressive AI products (which Manus appears to be) can be developed using multi-agent architecture.

The ‘General AI’ debate: What does it really mean?

One of the biggest claims surrounding Manus is that it is the first example of ‘general AI’. But what does that actually mean?

In AI discourse, General AI (or Artificial General Intelligence, AGI) refers to an intelligence that can reason, learn, and adapt across any domain - essentially, human-level intelligence. Manus is not that. What its creators mean by ‘general AI’ is that it’s a general-purpose agent - one that can perform multiple different types of tasks, unlike specialised AI that only writes code or translates languages.

However, this terminology is a bit misleading. Manus is still bound by its programming and the limitations of Claude 3.5. It can follow pre-set routines and chain actions together in a seemingly autonomous way, but it doesn’t exhibit true general intelligence. While Manus is an impressive multi-agent system, it is not fundamentally different from other agentic AI projects.

Reverse-engineering Manus

The hype around Manus has already sparked a wave of open-source replicas, lending further credence to the notion that much of Manus’s power comes from smart engineering rather than proprietary breakthroughs.

Projects like OpenManus emerged within days of Manus’s closed beta launch, replicating its multi-agent architecture using freely available AI models. Within 48 hours, OpenManus had gained 15,000+ stars on GitHub, showing a massive appetite for accessible AI agents.

OpenManus integrates models like Claude 3.5 and Qwen-VL, allowing users to create their own multi-agent systems without needing an invite code. Another independent project, OWL, offers similar functionality, proving that the core ideas behind Manus can be replicated without access to proprietary tech.

That a Claude 3.5-powered system can achieve Manus-like results suggests that AI breakthroughs aren’t just about bigger models - they’re about how we use them.

Instead of waiting for AGI, developers are already exploring ways to build powerful, action-oriented AI systems using the resources available today. This is an interesting and meaningful side-effect of the Manus hype - it’s revealed a wealth of open-source possibilities that less bountifully resourced developers have instantly seized upon.

More a milestone than a miracle

Manus AI is an important step forward in AI autonomy, but not a leap into true AGI. It has demonstrated the potential of multi-agent AI systems but has also exposed their current limitations. The initial hype, fuelled by exclusivity and bold claims, was quickly tempered by practical limitations and user frustration.

Ultimately, Manus is a preview of the future - not the destination itself. As open-source replicas emerge and AI models continue to evolve, we may soon see Manus-like agents becoming widely available. But for now, the pursuit of true AGI continues.

PS. It already looks like our 7,000-word paper on AI Agents needs an update. It was only released two weeks ago!

See you next week.