Video editing is an extremely time-intensive task. Thankfully, the release of numerous generative AI tools over the last couple of years has made the more tedious aspects of video editing much more seamless.

Certain tasks like multi-camera editing, clipping different aspect ratios for social media and adding subtitles can all largely be automated, which enables video editors to focus on more advanced aspects of their role.

Our process of using AI in media production demands that we select one or two apps and tools to do a specific task, and not have a confusing list of applications that do similar things. We also test tools out rigorously, using them in our own production process – so this list is based on actual experience of using the tool.

Also, we will not be claiming that Adobe Premiere with Adobe Firefly or DaVinci Resolve are specifically ‘AI applications.’ They are video editing applications that can be enhanced by AI plugins or in-browser tools. We expect video editors to be using one of them already.

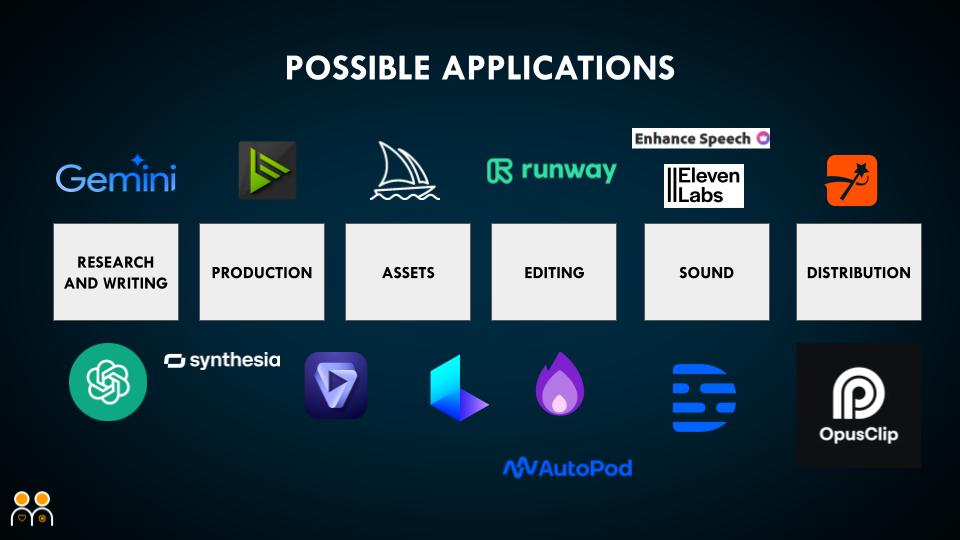

From the above collection, we recommend editors have access to at least some of the following, and we will explain why in the more detailed review of the applications below.

Image generation: Midjourney

Image and footage upscaling and finessing: Topaz Labs

Generative video: Runway and Luma Dream Machine

Fast background removal: Runway

Quick cutting: Firecut, AutoPod and OpusClip

Sound editing: Eleven Labs

Subtitling: Firecut and Submagic

Why do you need AI applications?

Ultimately it depends on what you specifically do, but in our experience using a number of these applications in a video editing process can save time. We’ll describe the contexts where we have found them useful in the descriptions.

Midjourney: Image generation

AI image generation will not be needed by a lot of commercial video editors. For instance, if working for a client with tight brand controls, it’s unlikely AI images will be freely allowed in an edit. However, AI image generation can be very useful, and can speed up the editing process because editors can generate missing assets themselves.

An example is if an editor needs a graphic, character or scene that is not easy to visualise with existing assets or stock libraries. Say, for instance, they are doing a short video about a historical or fictional character for whom no visual assets exist, then AI can make a representation quickly. Reimagining portraits such as Frida Kahlo above is also a major strength.

For this part of the workflow, we recommend Midjourney for its overall ease of use, price and outputs. Having tested it against DALL-E 3, we’ve found the following comparisons:

- Midjourney has a consistently high level of output without the need for much technical prompting. DALL-E is technically easier to prompt, but its output is not as good as Midjourney’s.

- Midjourney is low cost, starting at $10 a month. DALL-E is only available via a ChatGPT subscription starting at $20 a month.

- Midjourney requires access to Discord and some knowledge of its prompting parameters (these are not too far off basic Excel formulas). However, when used correctly, there are a lot of options like consistent character and seed generation that can be invaluable.

By default, Midjourney exports at 1024×1024 px, which is a bit low-res for video projects and usually the wrong aspect ratio. You can use the --ar parameter to change the aspect ratio however you like, and Midjourney also includes its own upscaling tools, which can upscale to 2912 px wide. However, using these images in a 4K project can still be problematic – but fortunately our next recommendation has a solution.
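As a rough illustration of how these parameters attach to a prompt, here is a minimal Python sketch that assembles a prompt string. The helper name `build_prompt` and the example description are hypothetical; `--ar` and `--seed` are Midjourney parameters, but Midjourney itself is driven through Discord, so this only builds text to paste in and does not call any API.

```python
from typing import Optional


def build_prompt(description: str,
                 aspect_ratio: str = "16:9",
                 seed: Optional[int] = None) -> str:
    """Append Midjourney-style parameters to a plain text description.

    Hypothetical helper: it only formats a string for pasting into Discord.
    """
    parts = [description.strip(), f"--ar {aspect_ratio}"]
    if seed is not None:
        # Reusing a seed is one way to keep repeated generations closer together.
        parts.append(f"--seed {seed}")
    return " ".join(parts)


print(build_prompt("portrait of a 17th-century cartographer, oil painting", seed=42))
# portrait of a 17th-century cartographer, oil painting --ar 16:9 --seed 42
```

Keeping the aspect ratio at 16:9 by default means generated stills drop straight into a standard widescreen timeline without cropping.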

Topaz Labs: Image and footage upscaling and finessing

There are a few slight accessibility problems with Topaz Labs Video AI: it costs an upfront fee of $299 and requires a desktop app to run. Both of these may be prohibitive in companies of a certain size because of IT approvals. Its main function is to use AI to significantly sharpen or upscale footage or images (although you’ll need to pay an additional £199 to get it working with images).

These upscaling features can seriously fix a shoot or other production process gone wrong, but they can also enhance usable footage that still contains noise by cleaning it up and adding detail. With these sorts of capabilities, it’s possible the application will pay back its high upfront cost in the editing of one video.

A lot of older archive footage is also not of particularly high quality, and its lower resolution can make it look terribly pixelated in a 3840×2160 4K project. Topaz Video AI can transform such footage, so it can be extremely useful in documentary production.
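To get a feel for how much work the upscaler has to do, the arithmetic can be sketched in a few lines of Python. This is only an illustration: the function names and the 640×480 and 1280×720 source sizes are assumptions chosen for the example, and nothing here reflects how Topaz works internally.

```python
def fit_factor(src_w: int, src_h: int, dst_w: int = 3840, dst_h: int = 2160) -> float:
    """Scale needed for the source to fit inside the target frame (bars, no cropping)."""
    return min(dst_w / src_w, dst_h / src_h)


def fill_factor(src_w: int, src_h: int, dst_w: int = 3840, dst_h: int = 2160) -> float:
    """Scale needed for the source to cover the whole target frame (cropping, no bars)."""
    return max(dst_w / src_w, dst_h / src_h)


# Illustrative 4:3 archive clip: different factors because the shapes differ.
print(fit_factor(640, 480))    # 4.5
print(fill_factor(640, 480))   # 6.0

# Illustrative 16:9 HD clip: same shape as 4K, so one factor does both jobs.
print(fit_factor(1280, 720))   # 3.0
```

The larger the factor, the more detail the software has to invent, which is why low-resolution archive material is the hardest case.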

Significantly upgrading footage using Topaz requires some hefty processing power – but given this is an article for video editors, we expect you’ll have that already. However, even with a high-spec graphics card and a lot of memory, the upscaling process takes minutes rather than seconds.

Runway and Luma Dream Machine: Generative video

OpenAI announced SORA in February 2024 and essentially the entire video production industry’s jaw dropped. However, by mid-2024 there was still no firm release date, while Luma Dream Machine and Runway had both become available.

While it’s possible to do some amazing things in these applications, in our view it’s still very early days for generative video, and we question its current feasibility in most commercial projects. If you’re an AI enthusiast, then you may have come across some stunning examples floating around on social media – not least the SORA demo content. This Volvo ‘ad’ has been doing the rounds.

This example uses the July release of Runway, and it is certainly impressive, but it’s also not likely to ‘replace’ a finished video overnight. As a concept video to test ideas, it’s fantastic, and that’s the primary use of generative video at the moment. It is not the finished article.

Luma Dream Machine was also released in summer 2024, just before Runway, and we encourage editors to embrace the technology and play around with it. It’s very possible it’ll come up with something usable – but will it consistently beat a clip library like Envato Elements or Storyblocks? We feel that there’s a long way to go before generative video is consistently useful against existing stock clip libraries.

Both Runway and Luma are free to use on general release for a limited number of prompts. To have enough iterations to produce a short film (say, 3 minutes), you will need to pay for credits.

Runway: Fast background removal

Okay, we’re talking to editors here, so we expect most of our audience has experience using After Effects features like keying and rotoscoping. If you’re already using this kind of workflow, then perhaps Runway’s remove background / AI green screening doesn’t matter – but it is quite a remarkable feature.

Using Runway you can essentially isolate any object in a frame and remove the background or its entire setting. This is amazing for hackier producers who aren’t familiar with After Effects, but it’s also a bit more accurate than rotoscoping for more complex scenes. The feature is available on a standard plan, which is just $15.

Overall, we don’t think in-browser editing is nearly as effective as using a locally run application like Adobe Premiere, and Runway isn’t really a replacement for one. However, the low price for this feature can make it a useful part of an editor’s toolkit.

Quick Cutting

We actually have three tools to run through here, and they all do slightly different things. Firecut and AutoPod have various features that can in theory speed up editing time, but we’ve found some drawbacks with each.

Firecut

This is a Premiere Pro plugin that claims to speed up the editing process by cutting silences and repetitions, while also being able to add B-Roll and subtitles. Over our testing, we found the cut silences feature somewhat counterintuitive, as an editor would then have to go back into the timeline and ‘check’ the AI’s work – and often fix it!

Further, the B-Roll feature is not really viable. We gave it a simple topic to process (a general story about Britain) and 8 of the 10 clips it added were useless. That said, it did save a lot of time in placing the other 2 clips – possibly 10-20 minutes vs doing it manually.

Overall, with the features that Firecut is really selling, it feels like the product has actually been in Beta for quite a while. However, the subtitling feature is remarkably good vs. our other testing (see its comparison to Submagic below). Focusing on the editing without captions, it feels like a tool that’s got a huge amount of potential, but just isn’t there yet.

AutoPod

In contrast to Firecut’s slightly underwhelming performance vs. standard editing, AutoPod has a feature that can make an editor’s jaw drop. Simply put, if you’re in the game of editing multi-camera interviews or podcasts, you must try AutoPod, and the individual price of $29 a month justifies itself very quickly.

Indeed, AutoPod is one of those tools that truly puts the tedium of editing into the dustbin. It can take two recorded camera tracks, identify which person is speaking and cut accordingly and automatically. I remember seeing Colin and Samir’s reaction to it when it first came out – they were stunned. Likewise, in editing teams where we have worked, there’s been universal praise for the feature.

AutoPod also has a social clip creator, which quickly transforms longer-form footage into social clips, and a jump cut editor to remove silences.

OpusClip

OpusClip is exceptional at solving one problem that most editors hate – reformatting videos in multiple aspect ratios with captions for social media. If you don’t need a multi-camera AI editor, then we think this is a better choice than AutoPod for social clip creation, as it is slightly cheaper on the starter plan. The pro plan seems to be on offer quite a lot, so it remains a bit cheaper than AutoPod.

Eleven Labs: Sound editing

Eleven Labs is primarily a voice over tool, but has also added AI generated Sound Effects, which editors could use in preference to a stock library. For voice over, it’s outstanding, with numerous AI generated voices that can be brought to life via a simple text input. You can choose from hundreds of different voices.

Video will primarily still use human voiceover, but if the narrator needs to change, for example in the reading of source material, then Eleven Labs can fill the gap. It’s not always perfect and some of the pronunciation may need a bit of tweaking, but you can do this through writing phonetically and using parameters, which also allows adding pauses.

Professional voice cloning is particularly useful if you need to fix some voice over, or add voice over where the original narrator is not available. You will need permission to clone someone’s voice, so it’s not particularly useful if you are using a third party to narrate your video and they don’t want their voice cloned, and it needs a few hours’ worth of training material. However, when all of these things line up, using AI voice cloning can speed up elements of the editing process.

Subtitling

Subtitling video was previously quite a time-consuming task that required a specific editing skill. To subtitle a 1 minute video manually could take between 30 minutes and an hour, but with these tools this time can be dramatically decreased. There are numerous subtitling apps available, but we’ve narrowed it down to two tools that have quite different benefits.

Submagic

Submagic is a web app that requires an editor to upload a video for it to be subtitled. The process of uploading, getting the AI to subtitle and then exporting takes about 15 minutes for a 1 minute social clip, which is not the quickest, but it’s much better than using the built-in tools in Adobe Premiere unless you have a bespoke workflow already set up.

The subtitling itself definitely needs tweaking after upload, along with timing, but the presentation and choice of styles is excellent and very social media friendly. Because of the upload and downloading time, it’s a tool best suited for more casual workflows that require under 20 videos to be subtitled a month for a good price.
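To put those figures side by side, here is a back-of-the-envelope Python sketch using only the numbers quoted in this article: 30 to 60 minutes per 1 minute video by hand, about 15 minutes through Submagic, and 20 videos a month. The function name is hypothetical, and the estimate ignores the extra time spent tweaking Submagic’s output, so treat it as an upper bound on the saving.

```python
def monthly_hours(videos_per_month: int, minutes_per_video: float) -> float:
    """Total hours spent subtitling in a month at a fixed time per video."""
    return videos_per_month * minutes_per_video / 60


VIDEOS = 20  # the monthly volume mentioned above

manual_low = monthly_hours(VIDEOS, 30)    # 10.0 hours
manual_high = monthly_hours(VIDEOS, 60)   # 20.0 hours
submagic = monthly_hours(VIDEOS, 15)      # 5.0 hours

print(f"{manual_low - submagic:.0f} to {manual_high - submagic:.0f} hours saved per month")
# 5 to 15 hours saved per month
```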

Firecut

Firecut is a more advanced subtitling tool than Submagic, and while we’ve noted that some of its features are currently a little clunky, its subtitling is excellent. It’s also more efficient because it’s an Adobe Premiere plugin. With a video already in the timeline, you can use Firecut’s subtitling tool to rapidly place subtitles in a range of styles. Thus if you need to subtitle video en masse, or much more than 20 videos a month, it’s a superior tool to Submagic.

The transcription is incredibly accurate, while being able to edit the captions directly in the timeline can justify the slightly high $40 monthly fee (when including VAT). You also have a lot more export options in terms of animation and styling.